What are health checks in OpenShift

Health checks help make an application resilient, so that it can be restarted automatically, without manual action, when something goes wrong. In OpenShift, health checks are configured using probes. Kubernetes provides two commonly used types of probes: the readiness probe and the liveness probe.

Liveness probe

The liveness probe checks that the application is still running correctly. If the liveness probe fails, OpenShift kills the container and restarts it according to the pod's restart policy.

Readiness probe

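The readiness probe checks whether the application is ready to handle traffic. While the readiness probe is failing, the pod is removed from the service's endpoints, so it receives no requests until the probe passes again. Another benefit of these health checks is that you can restart or redeploy an application with zero downtime when at least 2 pods are running for it: new pods receive traffic only once they are ready, so not all pods are taken down at the same time.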

Supported health check types

Both of the above probes can use any of the following check types:

- HTTP Health Check: calls a URL on the container. An HTTP status code between 200 and 399 counts as success; any other code counts as failure.

- Container Exec: runs a command inside the container. The check succeeds only if the command exits with code 0.

- TCP Socket: tries to open a TCP socket to the container. If the connection is established, the check succeeds; otherwise it fails.
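As a rough sketch, a single container entry could use an HTTP check for readiness and a command check for liveness. The container name and image, the `/healthz` path and the `/tmp/healthy` file are hypothetical placeholders; substitute whatever your application actually exposes. The field names `httpGet`, `path`, `port`, `exec` and `command` are the standard Kubernetes probe fields. The TCP variant uses a `tcpSocket` block instead, as in the template examples later in this post.

```json
{
  "name": "my-app",
  "image": "my-app:latest",
  "readinessProbe": {
    "httpGet": {
      "path": "/healthz",
      "port": 8080
    },
    "timeoutSeconds": 1,
    "periodSeconds": 10
  },
  "livenessProbe": {
    "exec": {
      "command": ["cat", "/tmp/healthy"]
    },
    "periodSeconds": 10,
    "failureThreshold": 3
  }
}
```

Here the readiness probe passes only while `GET /healthz` on port 8080 returns a status between 200 and 399, and the liveness probe passes only while `cat /tmp/healthy` exits with code 0, that is, while the file exists.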

Configuring health checks

These health checks can be configured in multiple ways, for example through the OpenShift console UI or through templates.

Using OpenShift console UI

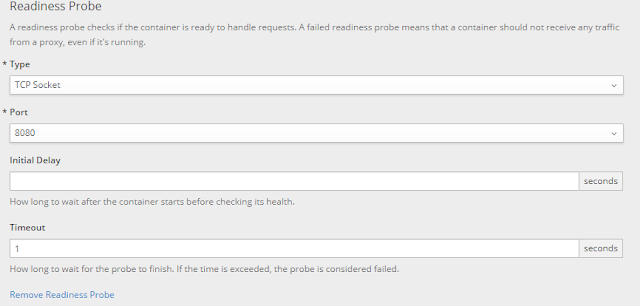

To add or edit a health check, log in to the OpenShift console and open your deployment config using the option "Application/Deployments/<deployment_name>". In the screen below, you can provide the readiness probe details.

In the screen below, you can provide the liveness probe details.

Finally, save the changes. The health checks then appear on your deployment under the Configuration tab.

Using templates

You can also add or edit the readiness and liveness probe blocks directly in your templates, and then process and apply those templates to update your deployment configurations. Add these blocks to the containers section of your DeploymentConfig template.

Readiness probe

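```json
{
  "kind": "DeploymentConfig",
  "spec": {
    "template": {
      "spec": {
        "containers": [
          {
            "readinessProbe": {
              "tcpSocket": {
                "port": 8080
              },
              "timeoutSeconds": 1,
              "periodSeconds": 10,
              "successThreshold": 1,
              "failureThreshold": 3
            }
          }
        ]
      }
    }
  }
}
```

Only the probe-related fields are shown; a complete DeploymentConfig also needs metadata, and each container needs at least a name and an image.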

Liveness probe

```json
{
  "kind": "DeploymentConfig",
  "spec": {
    "template": {
      "spec": {
        "containers": [
          {
            "livenessProbe": {
              "tcpSocket": {
                "port": 8080
              },
              "timeoutSeconds": 1,
              "periodSeconds": 10,
              "successThreshold": 1,
              "failureThreshold": 3
            }
          }
        ]
      }
    }
  }
}
```
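With the values used in the examples above (periodSeconds 10, failureThreshold 3, timeoutSeconds 1), you can roughly estimate how long a failing container goes unnoticed. The probe runs every periodSeconds, and OpenShift acts only after failureThreshold consecutive failed probes, the last of which can take up to timeoutSeconds:

$$
t_{\text{detect}} \approx \text{failureThreshold} \times \text{periodSeconds} + \text{timeoutSeconds} = 3 \times 10 + 1 = 31\ \text{s}
$$

So, roughly 30 seconds after the application stops accepting connections on port 8080, a failing readiness probe takes the pod out of rotation and a failing liveness probe restarts the container. Because successThreshold is 1, a single successful readiness probe marks the pod ready again. Treat this as an approximate worst case; the exact timing depends on when the failure starts relative to the probe schedule.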
